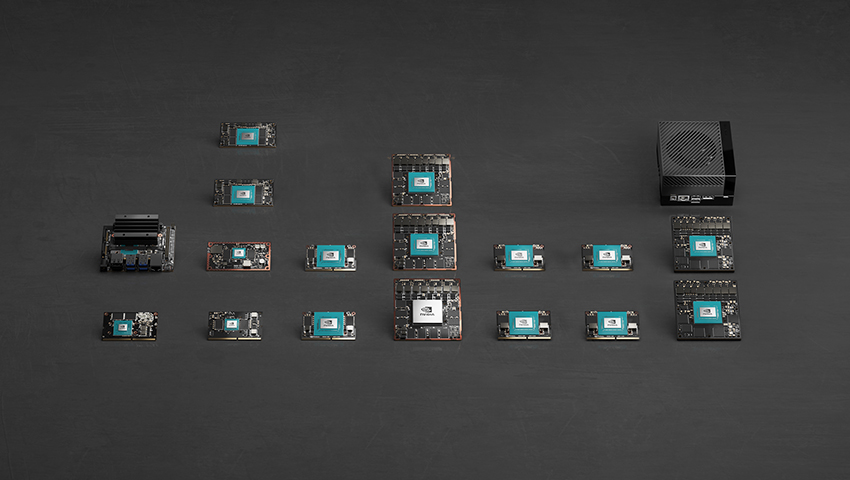

Jetson Modules

NVIDIA® Jetson™ brings accelerated AI performance to the edge in a power-efficient and compact form factor. Together with the NVIDIA JetPack™ SDK, these Jetson modules open the door for you to develop and deploy innovative products across all industries.

All modules in the Jetson family use the same NVIDIA CUDA-X™ software and support cloud-native technologies such as containerization and orchestration to build, deploy, and manage AI at the edge.

With Jetson, customers can accelerate all modern AI networks, easily roll out new features, and leverage the same software for different products and applications.

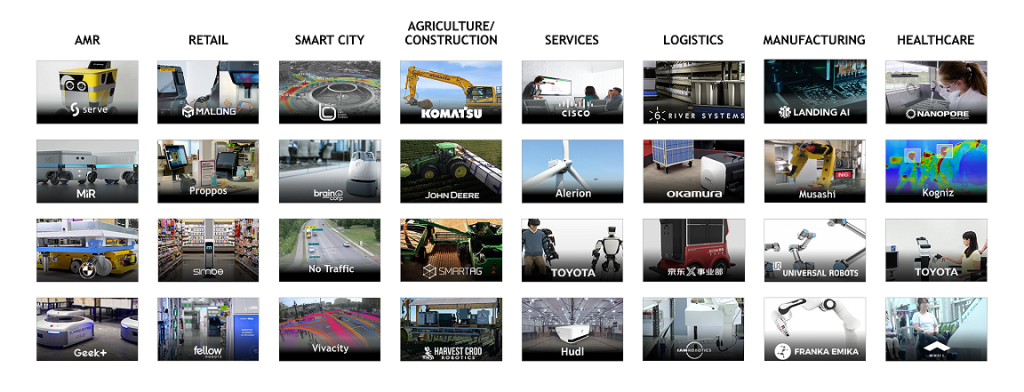

Edge AI Momentum

Jetson Support and Ecosystem

Jetson Support Resources

Detailed hardware design collateral, software samples and documentation, and an active Jetson developer community are here to help.

Support Resources

Jetson Ecosystem Partners

Get to market faster with software, hardware, and sensor products and services available from Jetson ecosystem and distribution partners.

Ecosystem Partners

| Jetson Nano | Jetson TX2 Series | Jetson Xavier NX Series | Jetson AGX Xavier Series | Jetson Orin Nano Series | Jetson Orin NX Series | Jetson AGX Orin Series | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TX2 NX | TX2 4GB | TX2 | TX2i | Jetson Xavier NX | Jetson Xavier NX 16GB | Jetson AGX Xavier | Jetson AGX Xavier 64GB | Jetson AGX Xavier Industrial | Jetson Orin Nano 4GB | Jetson Orin Nano 8GB | Jetson Orin NX 8GB | Jetson Orin NX 16GB | Jetson AGX Orin 32GB | Jetson AGX Orin 64GB | ||

| AI Performance | 472 GFLOPs | 1.33 TFLOPs | 1.26 TFLOPs | 21 TOPS | 32 TOPS | 30 TOPS | 20 TOPS | 40 TOPS | 70 TOPS | 100 TOPS | 200 TOPS | 275 TOPS | ||||

| GPU | 128-core NVIDIA Maxwell™ GPU | 256-core NVIDIA Pascal™ GPU | 384-core NVIDIA Volta™ GPU with 48 Tensor Cores | 512-core NVIDIA Volta™ GPU with 64 Tensor Cores | 512-core NVIDIA Ampere architecture GPU with 16 Tensor Cores | 1024-core NVIDIA Ampere architecture GPU with 32 Tensor Cores | 1024-core NVIDIA Ampere GPU with 32 Tensor Cores | 1024-core NVIDIA Ampere GPU with 32 Tensor Cores | 1792-core NVIDIA Ampere GPU with 56 Tensor Cores | 2048-core NVIDIA Ampere GPU with 64 Tensor Cores | ||||||

| GPU Max Frequency | 921 MHz | 1.3 GHz | 1.12 GHz | 1100 MHz | 1377 MHz | 1211 MHz | 625 MHz | 765 MHz | 918 MHz | 930 MHz | 1.3 GHz | |||||

| CPU | Quad-Core Arm® Cortex®-A57 MPCore processor | Dual-Core NVIDIA Denver 2 64-Bit CPU and Quad-Core Arm® Cortex®-A57 MPCore processor | 6-core NVIDIA Carmel Arm®v8.2 64-bit CPU 6MB L2 + 4MB L3 | 8-core NVIDIA Carmel Arm®v8.2 64-bit CPU 8MB L2 + 4MB L3 | 6-core Arm® Cortex®-A78AE v8.2 64-bit CPU 1.5MB L2 + 4MB L3 | 6-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU 1.5MB L2 + 4MB L3 | 8-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU 2MB L2 + 4MB L3 | 8-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU 2MB L2 + 4MB L3 | 12-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU 3MB L2 + 6MB L3 | |||||||

| CPU Max Frequency | 1.43 GHz | Denver 2: 2 GHz, Cortex-A57: 2 GHz | Denver 2: 1.95 GHz, Cortex-A57: 1.92 GHz | 1.9 GHz | 2.2 GHz | 2.0 GHz | 1.5 GHz | 2 GHz | 2.2 GHz | |||||||

| DL Accelerator | — | |||||||||||||||

| DL Max Frequency | — | |||||||||||||||

| Vision Accelerator | — | |||||||||||||||

| Safety Cluster Engine | — | 2x Arm® Cortex®-R5 in lockstep | ||||||||||||||

| Memory | 4 GB 64-bit LPDDR4 25.6GB/s | 4 GB 128-bit LPDDR4 51.2GB/s | 8 GB 128-bit LPDDR4 59.7GB/s | 8 GB 128-bit LPDDR4 (ECC Support) 51.2GB/s | 16 GB 128-bit LPDDR4x 59.7GB/s | 8 GB 128-bit LPDDR4x 59.7GB/s | 64 GB 256-bit LPDDR4x 136.5GB/s | 32 GB 256-bit LPDDR4x 136.5GB/s | 32 GB 256-bit LPDDR4x (ECC support) 136.5GB/s | 4GB 64-bit LPDDR5 34 GB/s | 8GB 128-bit LPDDR5 68 GB/s | 8 GB 128-bit LPDDR5 102.4 GB/s | 16 GB 128-bit LPDDR5 102.4 GB/s | 32 GB 256-bit LPDDR5 204.8 GB/s | 64GB 256-bit LPDDR5 204.8 GB/s | |

| Storage | 16GB eMMC 5.1 † | 16 GB eMMC 5.1 | 32 GB eMMC 5.1 | 16 GB eMMC 5.1 * | 32 GB eMMC 5.1 | 64 GB eMMC 5.1 | (Supports external NVMe) | 64GB eMMC 5.1 | ||||||||

| Video Encode | 1x 4K30, 2x 1080p60, 4x 1080p30, 4x 720p60, 9x 720p30 (H.265 & H.264) | 1x 4K60, 3x 4K30, 4x 1080p60, 8x 1080p30 (H.265); 1x 4K60, 3x 4K30, 7x 1080p60, 14x 1080p30 (H.264) | 2x 4K60, 4x 4K30, 10x 1080p60, 22x 1080p30 (H.265); 2x 4K60, 4x 4K30, 10x 1080p60, 20x 1080p30 (H.264) | 4x 4K60, 8x 4K30, 16x 1080p60, 32x 1080p30 (H.265); 4x 4K60, 8x 4K30, 14x 1080p60, 30x 1080p30 (H.264) | 2x 4K60, 6x 4K30, 12x 1080p60, 24x 1080p30 (H.265); 2x 4K60, 6x 4K30, 12x 1080p60, 24x 1080p30 (H.264) | 1080p30 supported by 1-2 CPU cores | 1x 4K60, 3x 4K30, 6x 1080p60, 12x 1080p30 (H.265); 1x 4K60, 2x 4K30, 5x 1080p60, 11x 1080p30 (H.264) | 2x 4K60, 4x 4K30, 8x 1080p60, 16x 1080p30 (H.265); 2x 4K60, 4x 4K30, 7x 1080p60, 15x 1080p30 (H.264) | ||||||||

| Video Decode | 1x 4K60, 2x 4K30, 4x 1080p60, 8x 1080p30, 9x 720p60 (H.265 & H.264) | 2x 4K60, 4x 4K30, 7x 1080p60, 14x 1080p30 (H.265 & H.264) | 2x 8K30, 6x 4K60, 12x 4K30, 22x 1080p60, 44x 1080p30 (H.265); 2x 4K60, 6x 4K30, 10x 1080p60, 22x 1080p30 (H.264) | 2x 8K30, 6x 4K60, 12x 4K30, 26x 1080p60, 52x 1080p30 (H.265); 4x 4K60, 8x 4K30, 16x 1080p60, 32x 1080p30 (H.264) | 2x 8K30, 4x 4K60, 8x 4K30, 18x 1080p60, 36x 1080p30 (H.265); 2x 4K60, 6x 4K30, 12x 1080p60, 24x 1080p30 (H.264) | 1x 4K60 (H.265), 2x 4K30 (H.265), 5x 1080p60 (H.265), 11x 1080p30 (H.265) | 1x 8K30, 2x 4K60, 4x 4K30, 9x 1080p60, 18x 1080p30 (H.265); 1x 4K60, 2x 4K30, 5x 1080p60, 11x 1080p30 (H.264) | 1x 8K30, 3x 4K60, 6x 4K30, 12x 1080p60, 24x 1080p30 (H.265); 1x 4K60, 3x 4K30, 7x 1080p60, 14x 1080p30 (H.264) | ||||||||

| CSI Camera | Up to 4 cameras; 12 lanes MIPI CSI-2; D-PHY 1.1 (up to 18 Gbps) | Up to 5 cameras (12 via virtual channels); 12 lanes MIPI CSI-2; D-PHY 1.1 (up to 30 Gbps) | Up to 6 cameras (12 via virtual channels); 12 lanes MIPI CSI-2; D-PHY 1.2 (up to 30 Gbps) | Up to 6 cameras (24 via virtual channels); 14 lanes MIPI CSI-2; D-PHY 1.2 (up to 30 Gbps) | Up to 6 cameras (36 via virtual channels); 16 lanes MIPI CSI-2, 8 lanes SLVS-EC; D-PHY 1.2 (up to 40 Gbps); C-PHY 1.1 (up to 62 Gbps) | Up to 6 cameras (36 via virtual channels); 16 lanes MIPI CSI-2; D-PHY 1.2 (up to 40 Gbps); C-PHY 1.1 (up to 62 Gbps) | Up to 4 cameras (8 via virtual channels***); 8 lanes MIPI CSI-2; D-PHY 2.1 (up to 20 Gbps) | Up to 6 cameras (16 via virtual channels**); 16 lanes MIPI CSI-2; D-PHY 2.1 (up to 40 Gbps); C-PHY 2.0 (up to 164 Gbps) | ||||||||

| PCIe* | 1 x4 (PCIe Gen2) | 1 x1 + 1 x2 (PCIe Gen2) | 1 x1 + 1 x4 OR 1 x1 + 1 x1 + 1 x2 (PCIe Gen2) | 1 x1 (PCIe Gen3, Root Port) + 1 x4 (PCIe Gen4, Root Port & Endpoint) | 1 x8 + 1 x4 + 1 x2 + 2 x1 (PCIe Gen4, Root Port & Endpoint) | 1 x4 + 3 x1 (PCIe Gen3, Root Port & Endpoint) | 1 x4 + 3 x1 (PCIe Gen4, Root Port & Endpoint) | Up to 2 x8 + 2 x4 + 2 x1 (PCIe Gen4, Root Port & Endpoint) | ||||||||

| USB* | 1x USB 3.0 (5 Gbps), 3x USB 2.0 | 1x USB 3.0 (5 Gbps), 3x USB 2.0 | up to 3x USB 3.0 (5 Gbps), 3x USB 2.0 | 1x USB 3.2 Gen2 (10 Gbps), 3x USB 2.0 | 3x USB 3.2 Gen2 (10 Gbps), 4x USB 2.0 | 3x USB 3.2 Gen2 (10 Gbps), 3x USB 2.0 | 3x USB 3.2 Gen2 (10 Gbps), 4x USB 2.0 | |||||||||

| Networking* | 1x GbE | 1x GbE | 1x GbE, WLAN | 1x GbE | 1x GbE | 1x GbE | 1x GbE | 1x GbE 1x 10GbE | ||||||||

| Display | 2 multi-mode DP 1.2/eDP 1.4/HDMI 2.0; 1 x2 DSI (1.5 Gbps/lane) | 2 multi-mode DP 1.2/eDP 1.4/HDMI 2.0; 1 x2 DSI (1.5 Gbps/lane) | 2 multi-mode DP 1.2/eDP 1.4/HDMI 2.0; 2 x4 DSI (1.5 Gbps/lane) | 2 multi-mode DP 1.4/eDP 1.4/HDMI 2.0 | 3 multi-mode DP 1.4/eDP 1.4/HDMI 2.0 | 1x 4K30 multi-mode DP 1.2 (+MST)/eDP 1.4/HDMI 1.4** | 1x 8K60 multi-mode DP 1.4a (+MST)/eDP 1.4a/HDMI 2.1 | 1x 8K60 multi-mode DP 1.4a (+MST)/eDP 1.4a/HDMI 2.1 | ||||||||

| Other IO | 3x UART, 2x SPI, 2x I2S, 4x I2C, GPIOs | 3x UART, 2x SPI, 4x I2S, 4x I2C, 1x CAN, GPIOs | 5x UART, 3x SPI, 4x I2S, 8x I2C, 2x CAN, GPIOs | 3x UART, 2x SPI, 2x I2S, 4x I2C, 1x CAN, PWM, DMIC & DSPK, GPIOs | 5x UART, 3x SPI, 4x I2S, 8x I2C, 2x CAN, PWM, DMIC, GPIOs | 3x UART, 2x SPI, 2x I2S, 4x I2C, 1x CAN, DMIC & DSPK, PWM, GPIOs | 4x UART, 3x SPI, 4x I2S, 8x I2C, 2x CAN, PWM, DMIC & DSPK, GPIOs | |||||||||

| Power | 5W – 10W | 7.5W – 15W | 10W | 20W | 10W – 20W | 10W – 30W | 20W – 40W | 5W – 10W | 7W – 15W | 10W – 20W | 10W – 25W | 15W – 40W | 15W – 60W | ||||

| Mechanical | 69.6 mm x 45 mm; 260-pin SO-DIMM connector | 87 mm x 50 mm; 400-pin connector; Integrated Thermal Transfer Plate | 69.6 mm x 45 mm; 260-pin SO-DIMM connector | 100 mm x 87 mm; 699-pin connector; Integrated Thermal Transfer Plate | 69.6 mm x 45 mm; 260-pin SO-DIMM connector | 100 mm x 87 mm; 699-pin connector; Integrated Thermal Transfer Plate | ||||||||||


† The Jetson Nano and Jetson Xavier NX modules included as part of the Jetson Nano developer kit and the Jetson Xavier NX developer kit have slots for using microSD cards instead of eMMC as system storage devices.

* USB 3.2, MGBE, and PCIe share UPHY lanes. See the Product Design Guide for supported UPHY configurations.

** See the Jetson Orin Nano Series Data Sheet for more details on additional compatibility with DP 1.4a and HDMI 2.1.

*** Virtual channels for Jetson Orin NX and Jetson Orin Nano are subject to change.

Refer to the Software Features section of the latest NVIDIA Jetson Linux Developer Guide for a list of supported features.
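The bandwidth figures in the Memory row can be related to the bus widths listed next to them: peak bandwidth is roughly the bus width in bytes multiplied by the per-pin transfer rate. The sketch below works backwards from a few table entries to the transfer rate they imply. The function name and the selection of entries are illustrative only, and the computed rates are derived values, not figures quoted in the table.

```python
def implied_transfer_rate_gtps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Return the per-pin transfer rate (GT/s) implied by a peak bandwidth.

    Assumes bandwidth = (bus width in bytes) * (transfers per second).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gb_s / bytes_per_transfer


# (label, bus width in bits, peak bandwidth in GB/s), copied from the Memory row.
MEMORY_CONFIGS = [
    ("4 GB 64-bit LPDDR4", 64, 25.6),
    ("8 GB 128-bit LPDDR4", 128, 59.7),
    ("32 GB 256-bit LPDDR4x", 256, 136.5),
    ("8 GB 128-bit LPDDR5", 128, 102.4),
    ("64 GB 256-bit LPDDR5", 256, 204.8),
]

if __name__ == "__main__":
    for label, width_bits, bandwidth in MEMORY_CONFIGS:
        rate = implied_transfer_rate_gtps(bandwidth, width_bits)
        print(f"{label}: {bandwidth} GB/s over {width_bits} bits -> {rate:.2f} GT/s")
```

Entries with the same memory type and the same implied rate differ in bandwidth only through bus width, which is why the 256-bit LPDDR5 configurations list twice the bandwidth of the 128-bit ones.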

Jetson Module Lineup

Jetson AGX Orin Series

Bring your next-gen products to life with the world’s most powerful AI computer for energy-efficient autonomous machines. Up to 275 TOPS and 8X the performance of the last generation for multiple concurrent AI inference pipelines, and high-speed interface support for multiple sensors make this the ideal solution for applications from manufacturing and logistics to retail and healthcare.

Jetson Orin NX Series

Experience the world’s most powerful AI computer for autonomous power-efficient machines in the smallest Jetson form factor. It delivers up to 5X the performance and twice the CUDA cores of NVIDIA Jetson Xavier™ NX, plus high-speed interface support for multiple sensors. With up to 100 TOPS for multiple concurrent AI inference pipelines, Jetson Orin NX gives you big performance in an amazingly compact package.

Jetson Orin Nano Series

NVIDIA® Jetson Orin™ Nano series modules deliver up to 40 TOPS of AI performance in the smallest Jetson form factor, with power options between 5W and 15W. This gives you up to 80X the performance of NVIDIA Jetson Nano™ and sets the new baseline for entry-level edge AI.

Jetson AGX Xavier Series

Jetson AGX Xavier series modules enable new levels of compute density, power efficiency, and AI inferencing capabilities at the edge. Jetson AGX Xavier ships with configurable power profiles preset for 10W, 15W, and 30W, and Jetson AGX Xavier Industrial ships with profiles preset for 20W and 40W. These power profiles are switchable at runtime, and can be customized to your specific application needs. These modules have 10X the energy efficiency and 20X the performance of Jetson TX2.
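On Jetson Linux, runtime switching between power profiles is typically done with the `nvpmodel` command-line tool (`-m` selects a mode, `-q` queries the current one). The following is a minimal Python sketch, assuming `nvpmodel` is installed and on the PATH. The helper names are hypothetical, and the numeric mode ID that corresponds to a given wattage varies by module and software release, so it has to be looked up for the target device rather than taken from this example.

```python
import subprocess
from typing import List


def nvpmodel_set_command(mode_id: int) -> List[str]:
    """Build the command that selects a power mode by numeric ID."""
    if mode_id < 0:
        raise ValueError("mode_id must be non-negative")
    return ["sudo", "nvpmodel", "-m", str(mode_id)]


def nvpmodel_query_command() -> List[str]:
    """Build the command that reports the currently active power mode."""
    return ["sudo", "nvpmodel", "-q"]


def switch_power_mode(mode_id: int) -> None:
    """Select a power mode, then print the active mode for confirmation.

    Only meaningful on a Jetson device; raises CalledProcessError on failure.
    """
    subprocess.run(nvpmodel_set_command(mode_id), check=True)
    subprocess.run(nvpmodel_query_command(), check=True)
```

Keeping command construction separate from execution lets the command lines be inspected or logged on a development machine without a Jetson attached.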

Jetson Xavier NX Series

Jetson Xavier NX brings up to 21 TOPS of accelerated AI computing to the edge in a small form factor module. It can run multiple modern neural networks in parallel and process data from multiple high-resolution sensors, a requirement for full AI systems. Jetson Xavier NX is production-ready and supports all popular AI frameworks.

Jetson TX2 Series

The Jetson TX2 series of modules provides up to 2.5X the performance of Jetson Nano. These modules bring power-efficient embedded AI computing to a range of use cases, from mass-market products with the reduced form-factor Jetson TX2 NX to specialized industrial environments with the rugged Jetson TX2i.

Jetson Nano

Jetson Nano is a small, powerful computer for embedded AI systems and IoT that delivers the power of modern AI in a low-power platform. Get started fast with the NVIDIA JetPack SDK and a full desktop Linux environment, and start exploring a new world of embedded products.
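The "up to N X" comparisons in the lineup descriptions can be loosely related to the AI Performance row of the comparison table. The sketch below divides peak figures for each pair of series. Two things here are assumptions rather than statements from the page: which modules each comparison refers to (for example, that "the last generation" means Jetson AGX Xavier), and that dividing a TOPS figure by a FLOPs figure is meaningful. TOPS and FLOPs count different kinds of operations, so the ratios are indicative at best.

```python
# Peak AI performance in tera-operations per second, from the AI Performance row.
# Assumption: each series is represented by its highest listed figure.
PEAK_TERA_OPS = {
    "Jetson Nano": 0.472,        # listed as 472 GFLOPs
    "Jetson TX2": 1.33,          # listed as 1.33 TFLOPs
    "Jetson Xavier NX": 21.0,
    "Jetson AGX Xavier": 32.0,
    "Jetson Orin Nano": 40.0,
    "Jetson Orin NX": 100.0,
    "Jetson AGX Orin": 275.0,
}

# (newer series, baseline series, multiplier quoted in the lineup text).
# The pairing of series is an assumption made for illustration.
QUOTED_COMPARISONS = [
    ("Jetson AGX Orin", "Jetson AGX Xavier", 8),
    ("Jetson Orin NX", "Jetson Xavier NX", 5),
    ("Jetson Orin Nano", "Jetson Nano", 80),
    ("Jetson AGX Xavier", "Jetson TX2", 20),
    ("Jetson TX2", "Jetson Nano", 2.5),
]


def peak_ratio(newer: str, baseline: str) -> float:
    """Ratio of the peak figures of two series from the table."""
    return PEAK_TERA_OPS[newer] / PEAK_TERA_OPS[baseline]


if __name__ == "__main__":
    for newer, baseline, quoted in QUOTED_COMPARISONS:
        ratio = peak_ratio(newer, baseline)
        print(f"{newer} vs {baseline}: quoted up to {quoted}X, "
              f"peak-figure ratio {ratio:.1f}X")
```

The computed ratios land in the same range as the quoted multipliers without matching them exactly, which is expected given that the quoted figures may rest on different workloads or precisions than the peak numbers in the table.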
